Multimodal Information Extraction

Visually-rich Documents; Transformers; BERT; Few-shot Learning; Meta Learning

Time:

Summer 2022

Project: Efficient Few-shot Multimodal Information Extraction in Visually-rich Documents

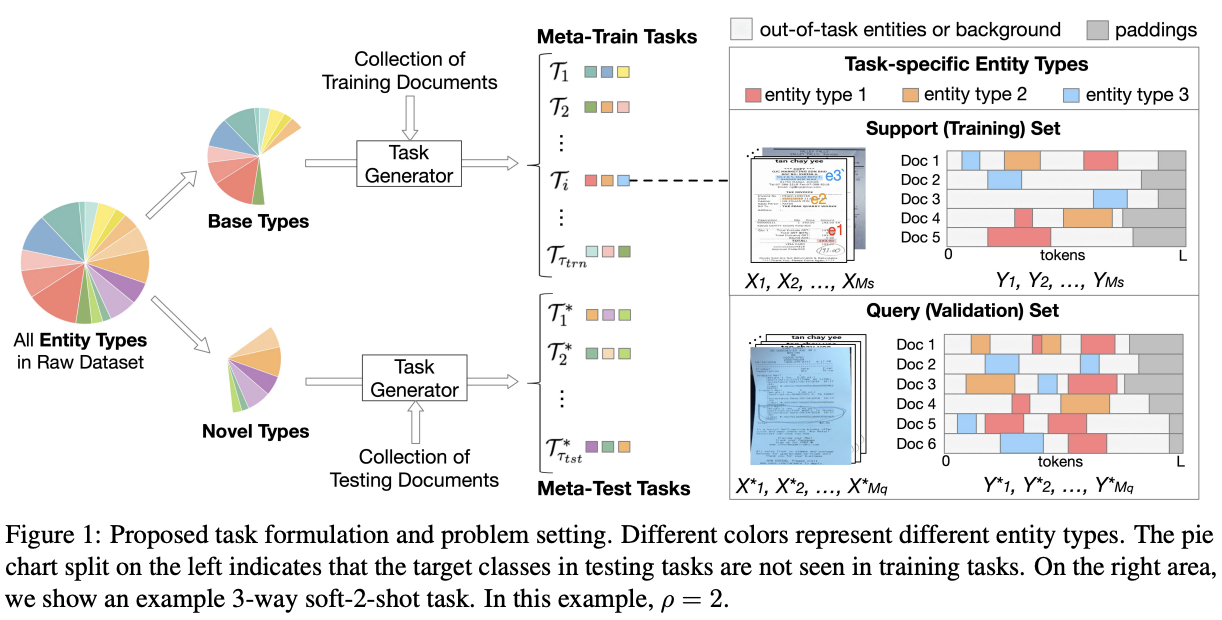

Visually-rich documents combine three modalities: language, image, and the layout structure of their contents. The task is to harness meta-knowledge to accelerate the learning process of

- understanding new document types given a pre-trained text-image Large Language Model;

- localizing rarely occurring key information types from out-of-distribution information.

Accomplishment:

Published two research papers.

Related Skills:

- NLP: Entity retrieval, Multimodal Transformer-based Large Language Models

- Tools: TensorFlow, JAX, seqeval

- Languages: Python

Collaborators:

- Mentors: Hanjun Dai (Google DeepMind), Bo Dai (Google DeepMind), Wei Wei (Cloud DocAI & Core ML App)